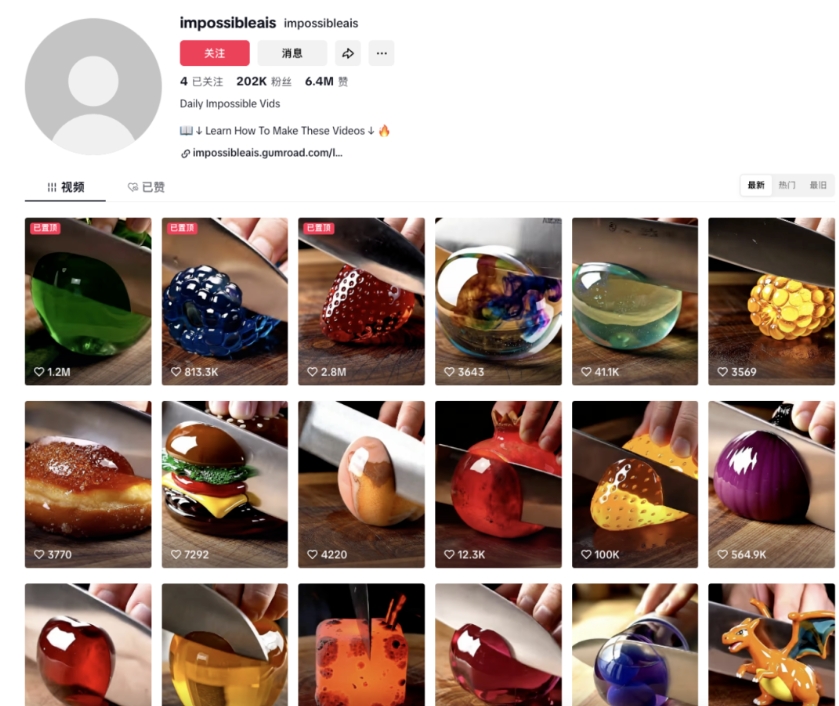

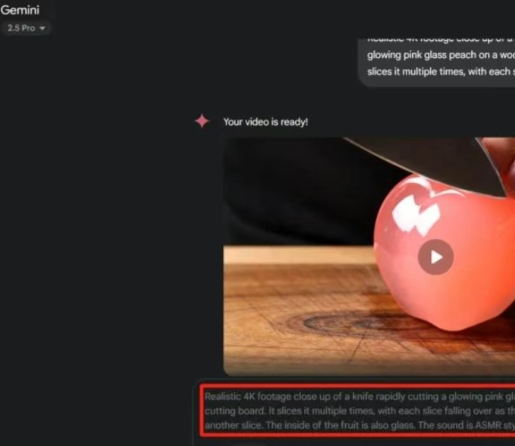

Recently, TikTok, Xiaohongshu, and YouTube have been swept by a wave of "AI fruit-cutting ASMR" videos:

A knife comes down gently, and a crystal strawberry shatters with the crisp sound of breaking glass. A few seconds of it relaxes body and mind. These clips pull in millions of views, with comments begging for the "original video."

How exactly are these types of videos made? Which AI tool is used? Is it complicated? Is there a fee?

Today's post is a hands-on guide to making these kinds of videos for free with Google Veo 3: zero cost, no editing background needed, just follow along 👇 (note, however, that opening the Veo 3 links requires a bit of "magic" network configuration)

✅ Step 1: Prepare the Prompt

The core of video generation is the text prompt. It's like giving instructions to an AI:

"What kind of scene are you shooting, what objects are there, what shots, what sounds"

Don't invent your own prompts from scratch. The best approach for beginners: copy first, then modify, then write your own.

🧠 Example prompt 1 (good for getting started quickly):

Realistic 4K footage close up of a knife rapidly cutting a glowing purple glass peach on a wooden cutting board. Each slice falls apart with a crisp ASMR-style glass shatter sound.

👉 You just need to change "purple glass peach" to the desired fruit, e.g. glass mango / apple / lemon...

🧠 Example prompt 2 (premium + multiple perspectives):

Shot in extreme macro, a flawless, crystal-clear [fruit] rests on a wooden board under warm light. The knife slices it slowly with a clean "ting" sound. Reflections shimmer on the surface, ASMR-style audio layers blend gently in a quiet environment.

👉 Replace [fruit] with the object you want to make, e.g. glass watermelon / diamond pineapple, etc.

🔄 Generate prompts quickly (letting an AI help with the writing is recommended):

Let DeepSeek / ChatGPT mimic these structures and make a template where one line of input outputs an entire prompt, for example:

Input: blue glass lemon

Output: a complete Veo prompt paragraph
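If you would rather not ask a chatbot every time, the same "one line in, full prompt out" idea can be sketched as a small local script. This is only a minimal illustration: the template text is copied from the two example prompts above, while the names `TEMPLATES` and `build_prompt` and the style keys `"quick"` and `"macro"` are made up for this example and are not part of Veo or any Google tool.

```python
# Prompt templates copied from the two example prompts above.
# "{fruit}" marks the only part that changes between videos.
# The keys "quick" and "macro" are hypothetical labels for this sketch.
TEMPLATES = {
    "quick": (
        "Realistic 4K footage close up of a knife rapidly cutting a glowing "
        "{fruit} on a wooden cutting board. Each slice falls apart with a "
        "crisp ASMR-style glass shatter sound."
    ),
    "macro": (
        "Shot in extreme macro, a flawless, crystal-clear {fruit} rests on a "
        "wooden board under warm light. The knife slices it slowly with a "
        'clean "ting" sound. Reflections shimmer on the surface, ASMR-style '
        "audio layers blend gently in a quiet environment."
    ),
}


def build_prompt(fruit: str, style: str = "quick") -> str:
    """Fill one fruit description into the chosen template."""
    fruit = fruit.strip()
    if not fruit:
        raise ValueError("fruit must not be empty")
    if style not in TEMPLATES:
        raise ValueError(f"unknown style: {style!r}")
    return TEMPLATES[style].format(fruit=fruit)


if __name__ == "__main__":
    # One line of input per video; paste each printed prompt into Veo 3.
    for fruit in ["blue glass lemon", "glass mango", "diamond pineapple"]:
        print(build_prompt(fruit, style="quick"))
        print()
```

The script only produces text. You still paste each prompt into Gemini or Flow by hand, as described in Step 2.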

✅ Step 2: Generate Video with Veo 3

Veo 3 is Google's latest text-to-video tool. It supports 1080p output, ASMR-style sound, and multi-angle shots.

📍 Method 1: Gemini official website (easy to use)

Link: https://gemini.google.com

- Select the Gemini 2.5 Pro model

- Enter the prompt

- Click on the video button → wait for generation

📍 Method 2: Google Labs Flow (customizable)

Link: https://labs.google/flow/

- Switch model to: Veo 3 - Fast (Text to Video)

- Generate 1 to 4 videos at a time, with support for continuous frames and transitions

- More flexible credit consumption and more parameters to adjust

🎬 Final advice: don't just play around, build an account!

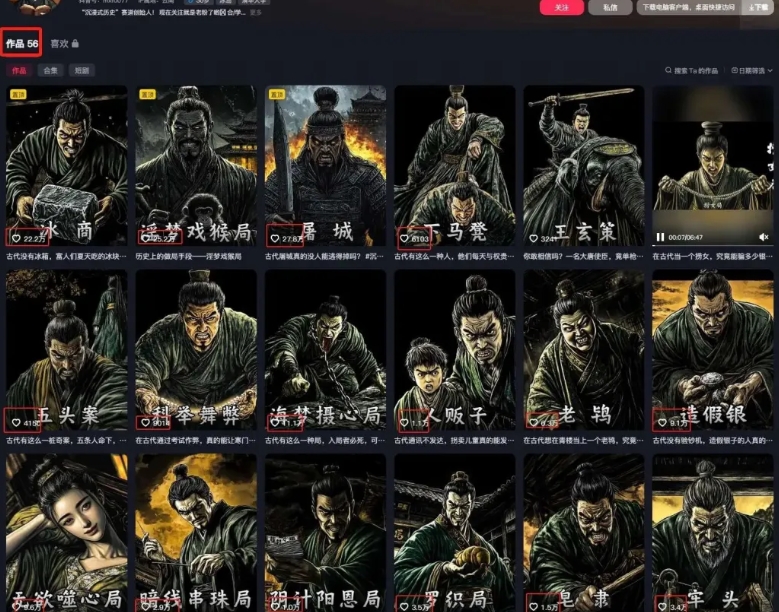

ASMR fruit-cutting videos aren't a "toy"; they are a traffic formula plus a content model.

Like the viral TikTok creators, you can generate these videos in batches, publish them on a regular schedule, and build a dedicated account around them.

There are also several ways to monetize:

- Package your generation experience and editing workflow → sell paid tutorials

- Sell finished footage → list it on Taobao / Weishop

- Promote AI tools → earn affiliate commissions

These videos are easy to make, low-barrier, and extremely relaxing to watch, which makes them a great fit for posting consistently on short-video platforms.